CS1303-Lecture-8

Background

- Virtual memory: separating logical from physical memory

- Separate the processes from each other and from the OS

- Logical address space much larger than physical address space

- Only part of the program needs to be in memory for execution

- Virtual memory can be implemented via

- Paging

- Segmentation

Demand Paging

Demand paging: a paging system with swapping

– To execute a program on storage (disk), swap it into memory

– Instead of swapping in entire program into physical memory, only swaps those pages next to the currently executing instructionA Page Table is used to keep track of pages that are in physical memory (Valid 1) or on disk (Invalid 0)

– If the page is not currently in physical memory, then the address in back storage is also stored in Page TableIf the program tried to use a page not in memory, a Page Fault trap will occur (caused by the invalid bit in Page Table)

Demand paging brings a page into memory only when needed

– Less I/O needed

– Less memory needed

– Faster response

– More users supportedA page is needed -> reference to it

– invalid reference -> abort

– not-in-memory -> bring to memory

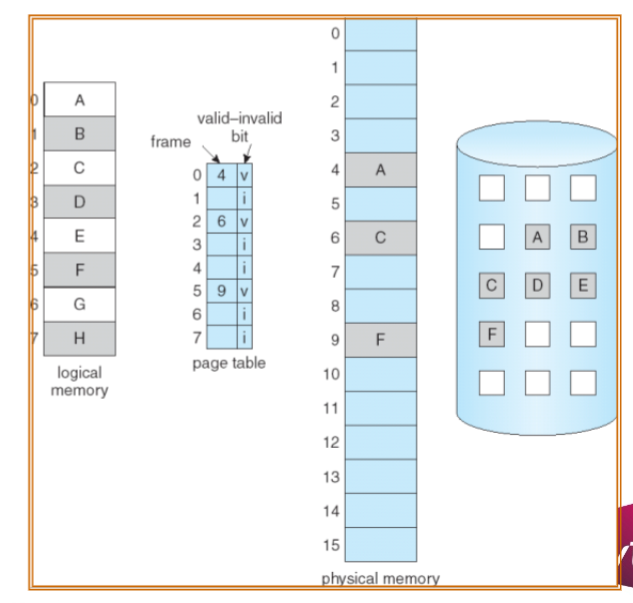

Valid-Invalid Bit

- A valid–invalid bit is associated with each page table entry

– v(1) -> in-memory

– i(0) -> not-in-memory - Initially set to 0 on all entries

- During address translation

– valid–invalid bit is 0 -> page fault

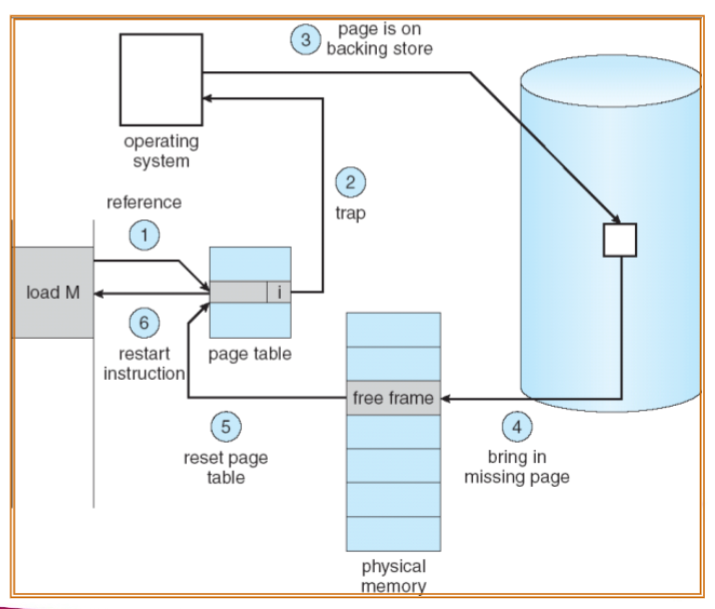

Page Fault

- First reference to a page will trap to OS -> page fault

- Get free frame on memory

- Bring page into frame.

- Reset tables, validation bit = 1

- Restart instruction

Page Replacement

What happens if there is no free frame?

– swappingPage replacement: find some page in memory, but not really in use, swap it out

Replacement algorithm

– Performance: want an algorithm resulting in minimum number of page faultsFind the location of the desired page on disk

Find a free frame:

– If there is a free frame, use it

– If there is no free frame, use a page replacement algorithm to select a victim frameRead the desired page into the (newly) free frame

Update the page table

Restart the process

Performance

- Page Fault Rate 0 <= p <= 1

– if p = 0 no page faults

– if p = 1, every reference is a fault - Effective Access Time (EAT)

EAT = (1 – p) x memory_access_time + p x page_fault_service_time - Example

– Assume: Memory access time = 200 nanoseconds, Page fault rate = p, page fault service time = 8 milliseconds

– EAT = (1 – p) x (200) + p (8 milliseconds) = 200 + 7,999,800 x p - EAT ~ p

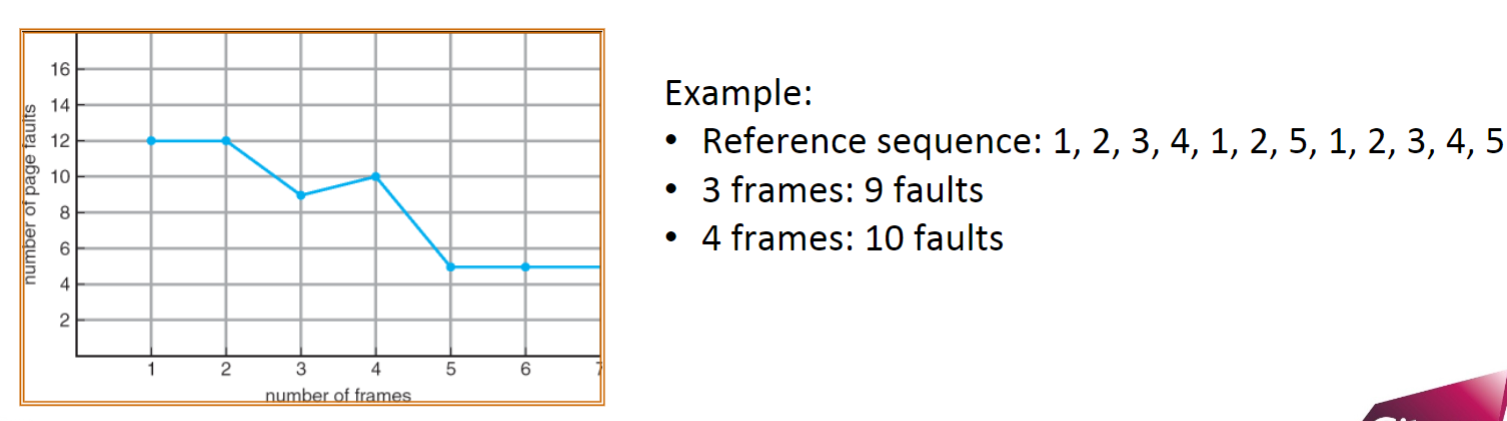

Page Faults vs The Number of Frames

- In general, larger number of frames -> fewer page faults

Optimal Algorithm

Replace page that will be unused for longest period of time in the future

Example:

- Reference sequence:

- Reference sequence:

Not a realistic algorithm in practice

- Hard to know which one will be unused for longest time

For measuring how well a realistic algorithm performs

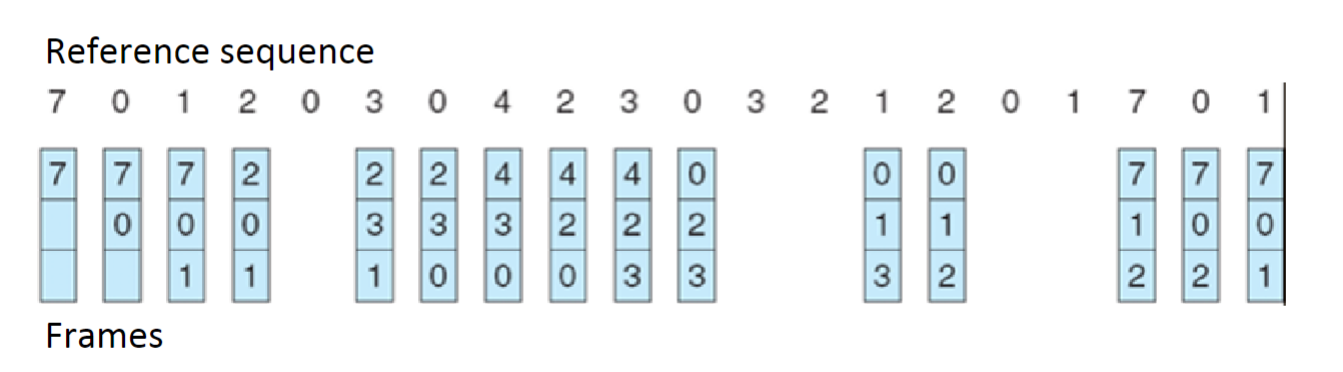

FIFO Algorithm

FIFO (First-In-First-Out): replaces earliest loaded page

Example:

In general, more frames -> fewer page faults

Belady’s Anomaly: sometimes, more frames may lead to more page faults

LRU Algorithm

LRU (Least Recently Used): replaces pages that have not been used for the longest time

– Generally good algorithm and frequently used

– Do not suffer Belady’s anomalyExample:

Counter-based implementation

– every page table entry has a counter

– every time page is referenced, copy the clock into the counter

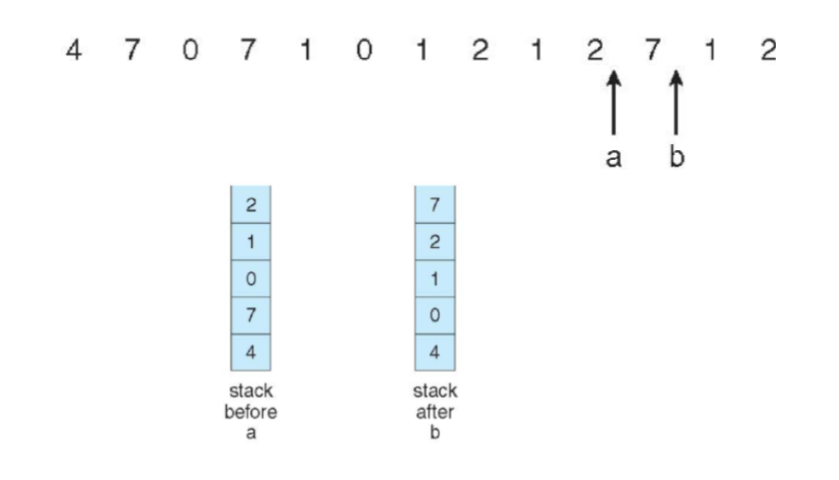

– search for page with smallest counter to replaceStack-based implementation

– keep a stack of page numbers (in double linked list)

– when a page is referenced, move it to the top of the stack

– update is more expensive, but no need to search for replacementStack-based implementation example

Counting-Based Algorithm

- Keep the number of references made to each page

- LFU (Least Frequently Used) replaces page with smallest counter

– Problem: what if a page is heavily used during process initialization and then never used? - MFU (Most Frequently Used) replaces page with largest counter

– based on the argument that page with the smallest count was probably just brought in and has yet to be used

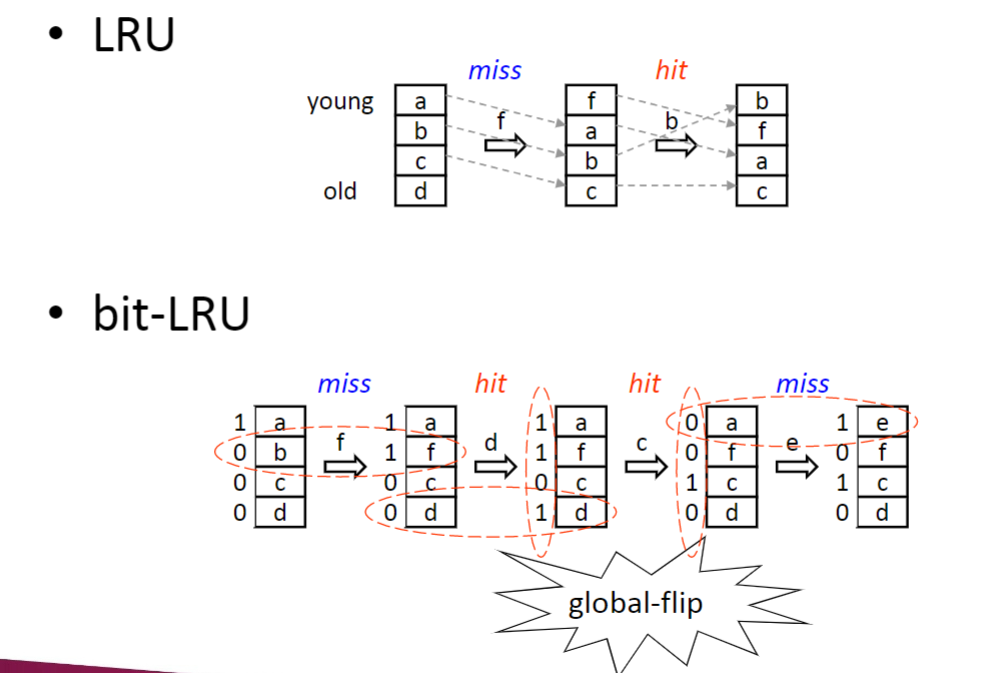

LRU Approximation

- Counter-based and stack-based LRU have high overhead

- LRU approximation with a reference bit (bit-LRU)

– associate with each page a reference bit, initially set to 0

– when page is referenced, set the bit to 1

– replace some page with reference bit = 0 (if one exists), and set bit=1

– when there is only one bit=0, do a global flip

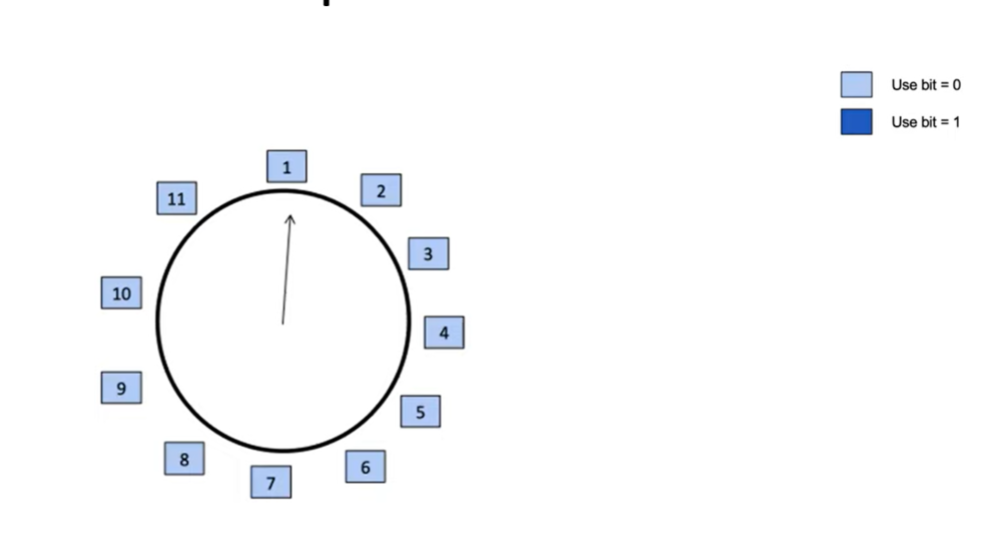

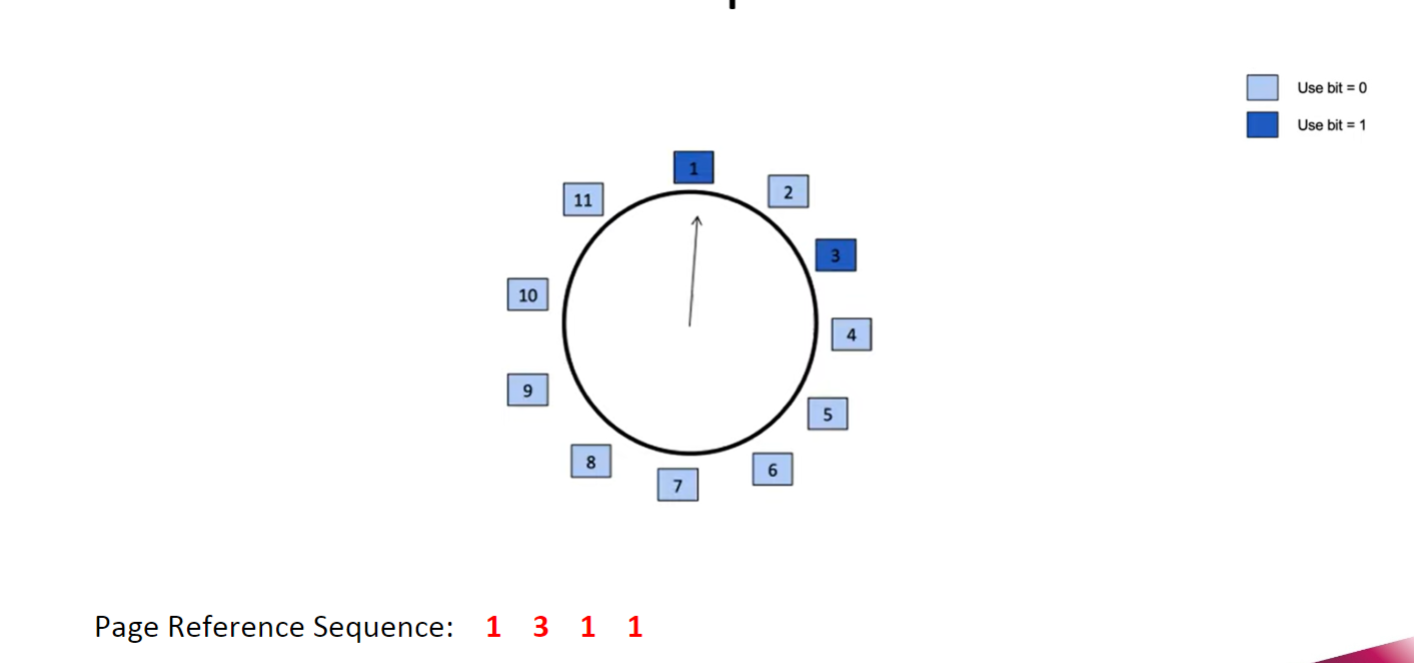

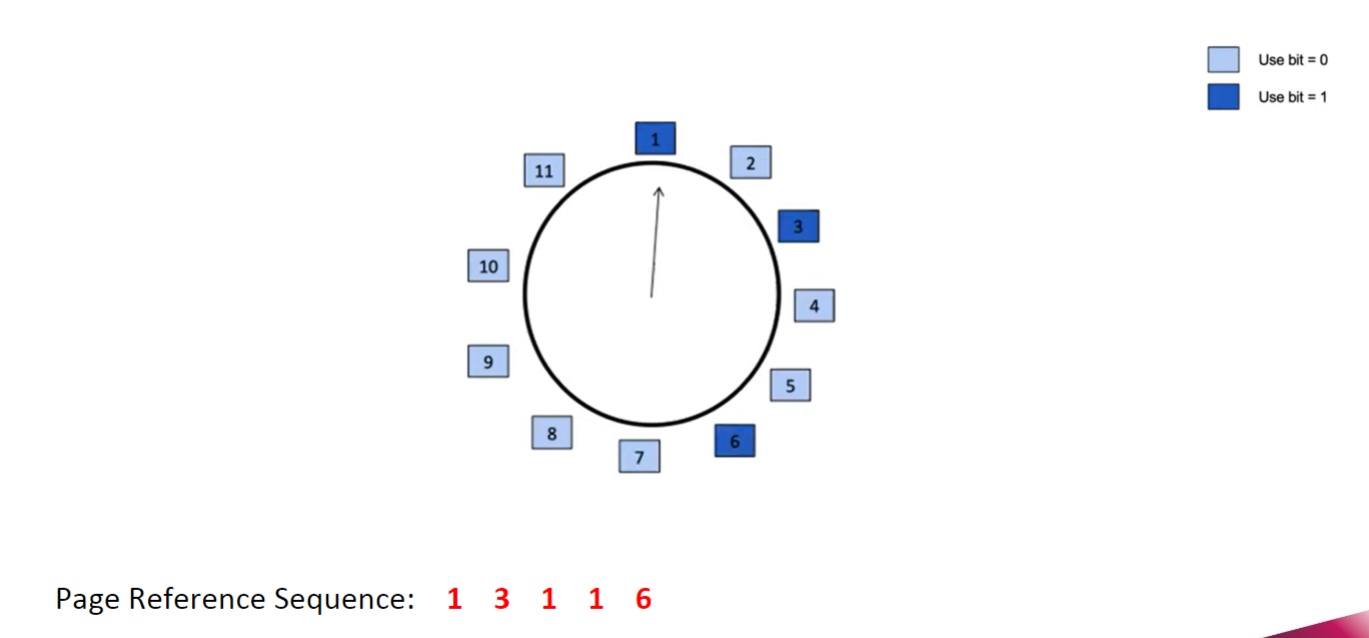

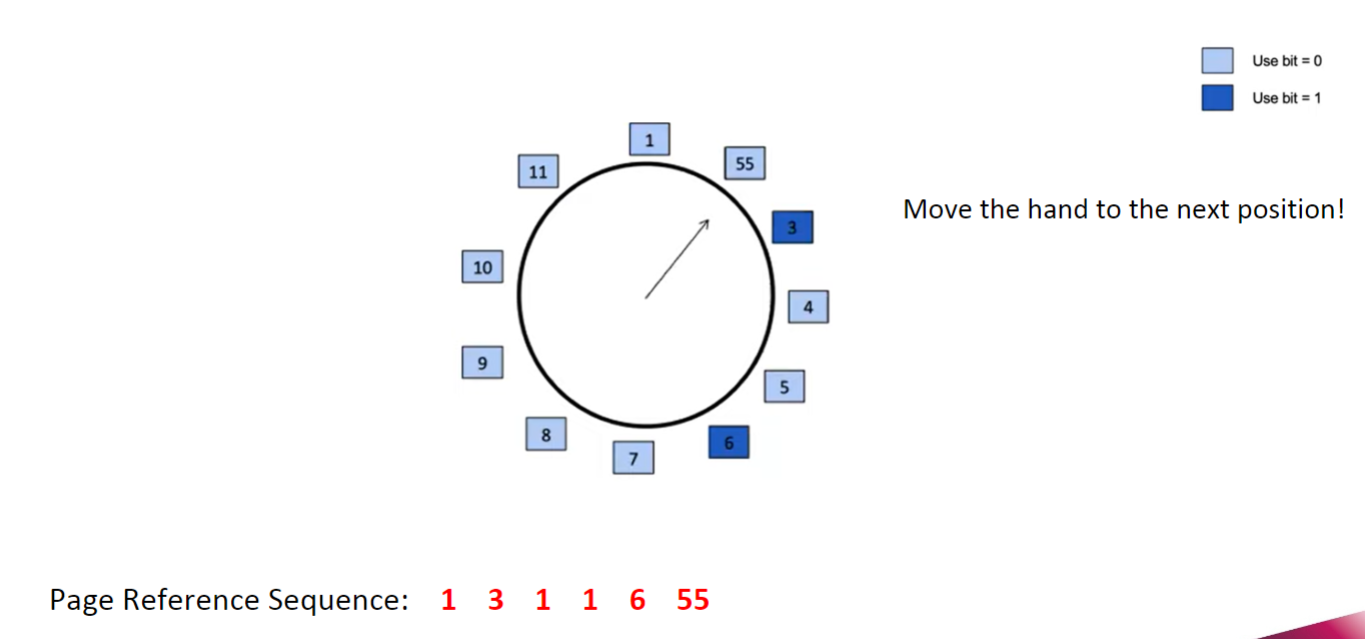

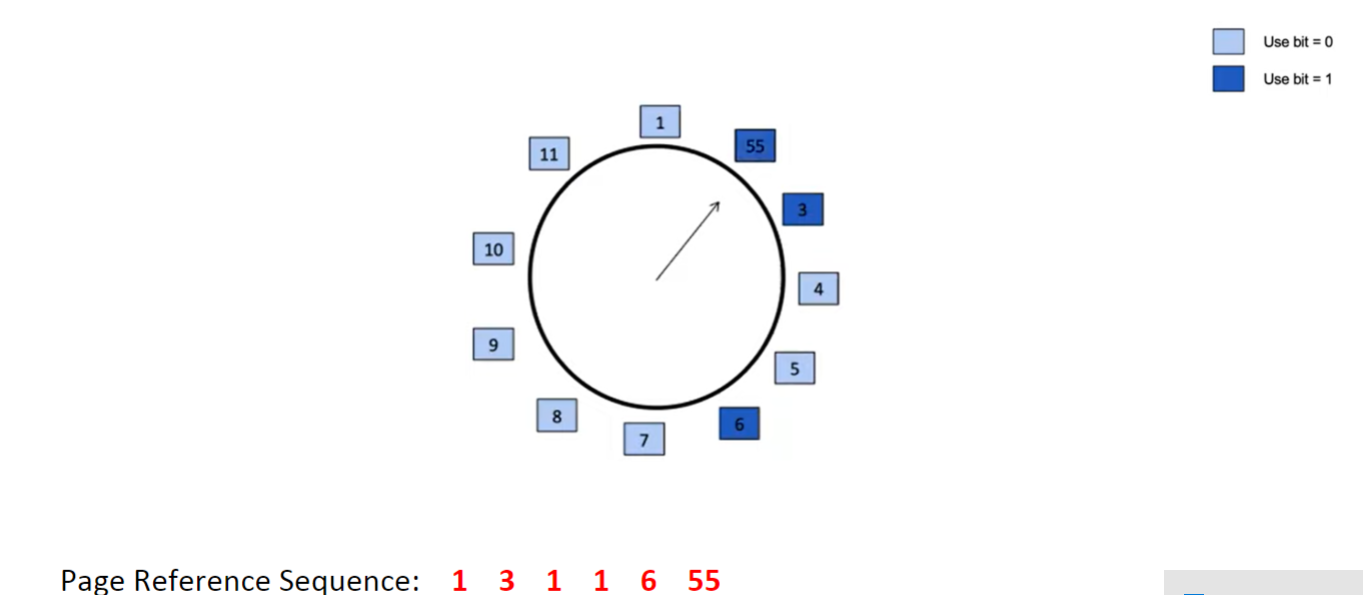

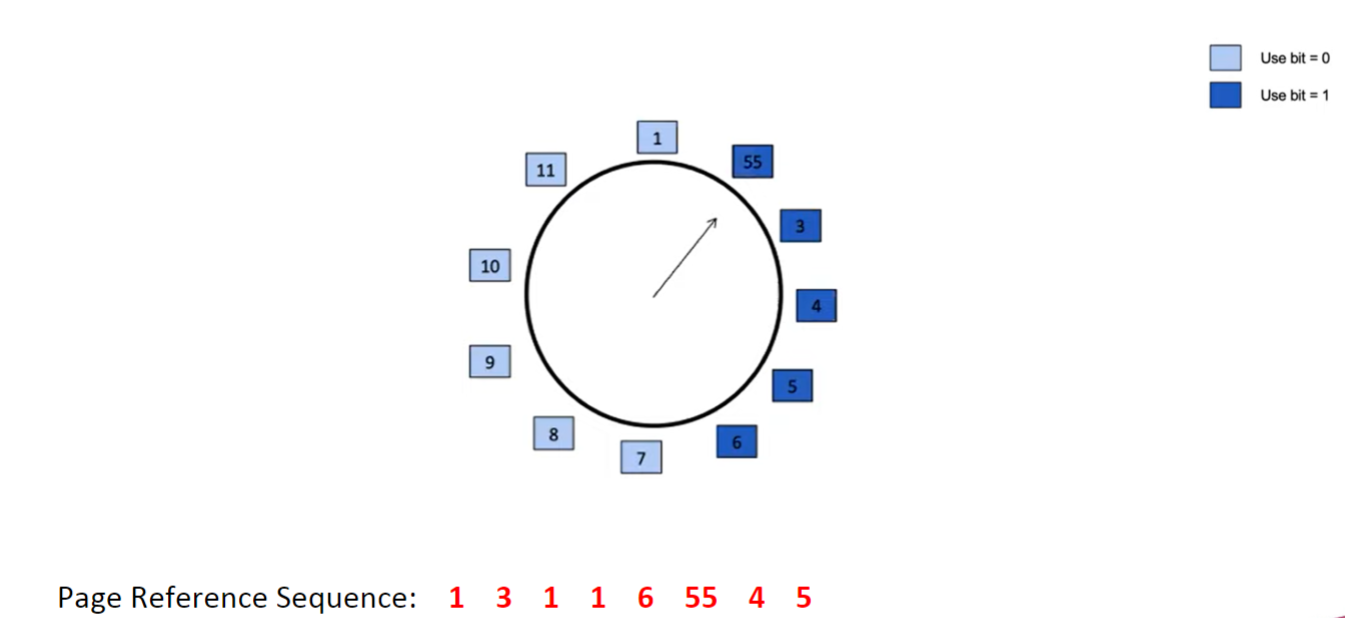

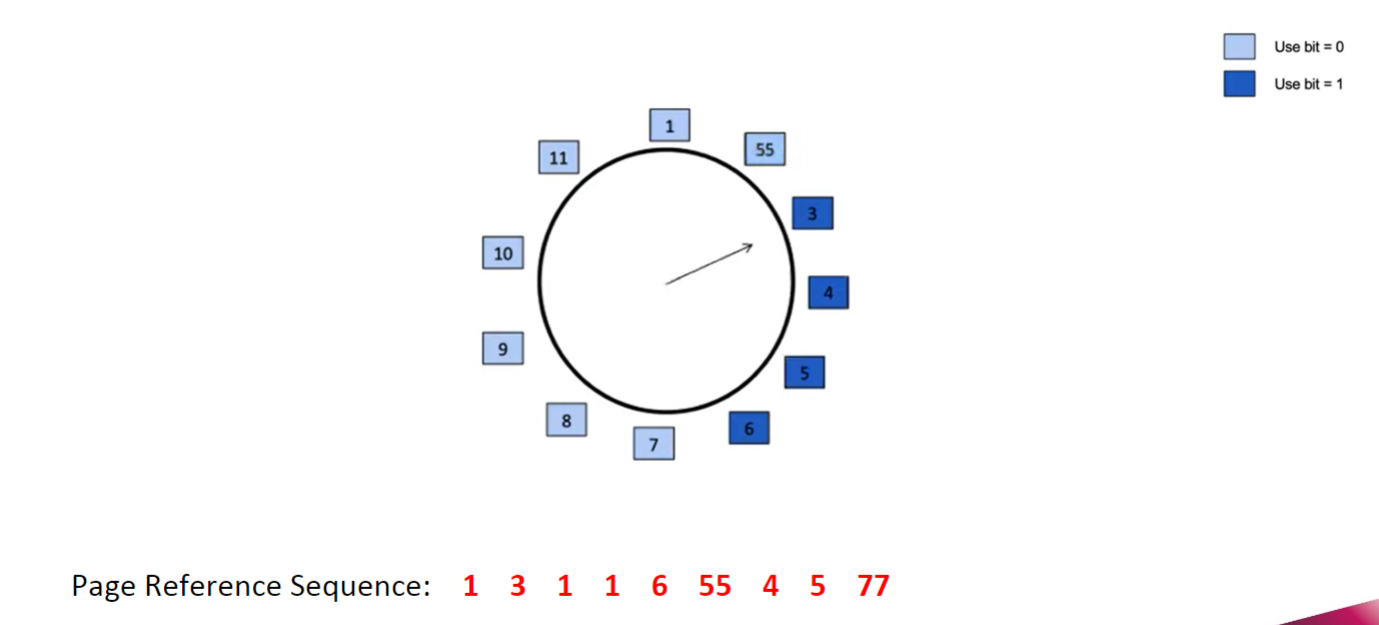

Second-Chance(Clock) Replacement Algorithm

- Another approximation of LRU

- Each page has a reference bit, initially 0

– When a page is referenced, the bit is set to be 1

– To replace a page, check the reference bit - If currently is 0, then it can be replaced

- If currently is 1, then it is changed to be 0

- All pages are linked as a circle

- Visit all pages in the circular order

Example

Allocation of Frames

How many frames should be allocated to each process

Allocation schemes

- Equal allocation

- Propositional allocation

- Priority akkication

- …

Equal allocation

- For example, if there are 100 frames (after allocating frames for the OS) and 5 processes, give each process 20 frames

- Issue: But jobs use memory uneually

Proportional allocation

- Allocate accroding to process size

- How do you determine process size?

Priority allocation

- give high priority process more memory than low priority process

Replacement Scopes

Local replacement

- Each process selects from only its own its own set of allocated frames

- Process slowed down even if other less used pages of memory are avaiable

Global repalcement

- Process selects replacement frame from set of all frames

- One process can take a frame from another

- Process may not be able to control its page fault rate

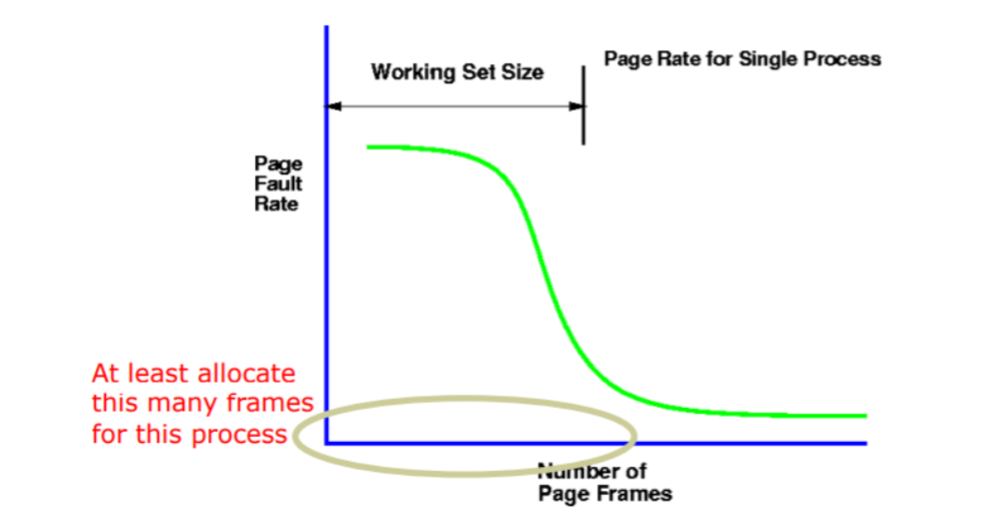

Thrashing

Thrashing: a process is busy swapping pages in and out, i.e. spending a lot of time in paging rather than executing

If a process does not have “enough” pages, page-fault rate is high

- For example

- Process has 3 frames allocated to it(sue LRU)

- Reference sequence is 123412341234

- ALl cause page faulsts

- For example

Higher mutiprogramming degree may lead to lower CPU utilization due to thrashing

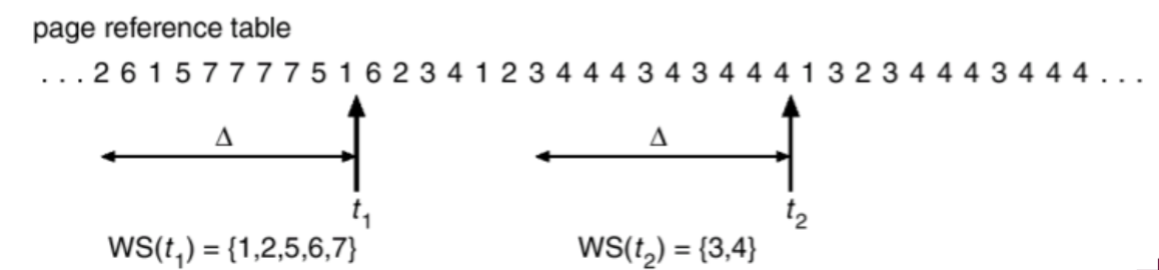

Working set

How much memory needed to keep the most recent computation in memory with few page faults

- The principle of locality

- A program clusters its access to data and text temporally

- A recently accessed page is more like ly to be accessed again

- Need to know the set of pages process to avoid thrashing

- Requires knowing the future

- Working set: Pages referenced in certain amount of time in the past

- Approximates locality

- The principle of locality

Working-set window △, which can be

- A certain time intervalm or

- Fixed number of page references

- Example: 10,000 page references

Working set of a process Pi = page referenced in most recent △

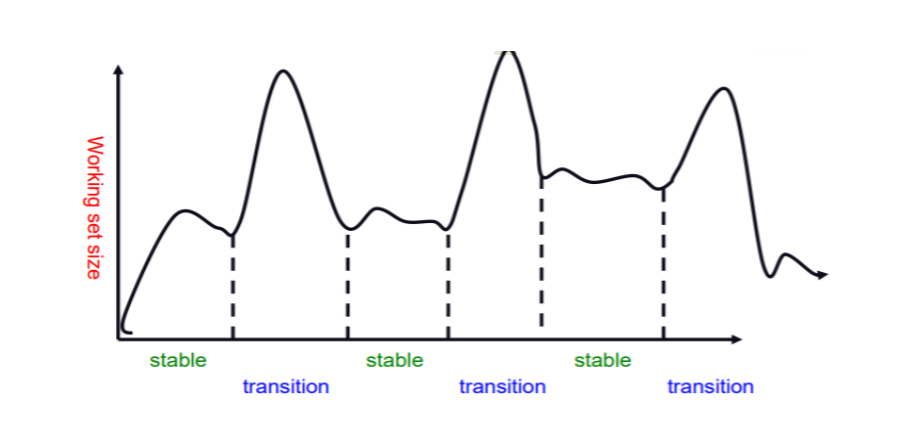

Working set size

Typical programs have phasess

Prepaging

- Prepage all or some of the pages that a process will need,before they are referenced

- To reduce large number of page faults at process startup

- If prepaged pages are unused, I/O and memory was wasted

- Assume s pages are prepaged and α of the pages is used

– Cost of s * α save pages faults larger or smaller than the cost of prepaging

– s * (1- α) unnecessary pages?

– Smaller α -> more prepaging loses